Research Article - (2022) Volume 15, Issue 4

Received: 02-Apr-2022, Manuscript No. jcsb-22-53532;

Editor assigned: 04-Apr-2022, Pre QC No. P-53532;

Reviewed: 18-Apr-2022, QC No. Q-53532;

Revised: 23-Apr-2022, Manuscript No. R-53532;

Published: 30-Apr-2022, DOI: 10.37421/0974-7230.2022.15.407

Citation: Jeffrey, Arle E. and Longzhi Mei. “Computational Investigation of Complexity and Robustness in Neural Circuits.” J Comput Sci Syst Biol 15 (2022): 407.

Copyright: © 2022 Arle JE, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

The stability of evolutionary systems is critical to their survival and reproductive success. In the face of continuous extrinsic and intrinsic stimuli, biological systems must evolve to perform robustly within sub-regions of parameter and trajectory spaces that are often astronomical in magnitude, a property characterized as homeostasis over a century ago in medicine and later in cybernetics and control theory. Various design strategies for robustness have evolved and are conserved across species, such as redundancy, modularity, and hierarchy. We investigate the hypothesis that a further strategy for robustness lies in evolving neural circuitry network components and topology such that increasing the number of components results in greater system stability. As measured by a center of maximum curvature method related to firing rates, the neural circuitry systems model transitioned to a robust state at ~153 network connections (network degree).

Complexity • Non-linear • Dynamical systems • Connectome • Neural circuit • Stability • Robustness • Resilience • Systems biology • Functional connectivity

To survive and reproduce, biological systems have evolved high levels of resilience to perturbation, also referred to as ‘robustness’: the ability to maintain functionality within astronomically large parameter spaces [1,2]. Bernard C [3], best known for deep philosophical observations on the proper conduct of biological science and for inventing ‘blind’ experimental methods, also played a significant role in developing the concept of homeostasis in biological systems, later refined by Cannon WB [4]. Bernard and Cannon identified homeostasis with the living system’s tendency to maintain a ‘healthy state’ despite continuous inputs from within and without that perturb it and, if perturbed beyond a limit, to enter a diseased state. Stability and its relationship to complexity have been studied in biological systems at many levels, from ecology to cellular biomolecules [5-8].

At some meta-level, such as the ‘operating system’ of the brain and spinal cord, analogous to the operating system of complex software hierarchies, neural circuits’ overall functions are stably maintained despite extensive cell and circuit ‘reformatting’ (property modulation) throughout infant, child and adult life and despite enormous variation in the dynamic range of external input. Moreover, though properties at any systems level in the nervous system may be altered due to disease or degeneracy during normal aging [9-13], even with large amounts of neural component loss or disruption, up to a certain point, general stable function is maintained. For instance, animal models predict that 80% of the dopaminergic neurons in the substantia nigra must be lost for movement disorder symptoms to appear in Parkinson’s disease [14].

One evolutionary strategy for network robustness is redundancy, as seen in von Neumann’s biologically-inspired ‘multiple line trick’ to endow automata with unlimited robustness or as is engineered into the internet’s ability to transmit a message from node A to node B under connectivity loss [15-20]. When a circuit fails through damage, circuit redundancy may permit continuation or restoration of function. Redundant circuit copies may differ from each other, which may offer the system more chance to adapt to environmental challenges. In this sense, the more complexity built into the system, the more robust its potential integrity. Modularity is a second evolutionary strategy to stabilize connectomes. It is present in conserved features across species, from C. elegans to humans, and more complex organisms also show a concentrated central network hub and small-world topology that evolved for different reasons [2]. Hierarchical organization may be a third evolutionary strategy [21].

However, redundancy, modularity, and hierarchical organization may not be the only evolutionary strategies for robustness and may not be sufficient in all network structure classes. For instance, if each component is simple, non-complex, and not highly interconnected, when circumstances defeat one element, all may succumb, and numerous failure modes and cascades of failure in complex systems can occur. See, as the pre-eminent example, discussions of the ‘butterfly effect’ and early work that defined ‘chaotic systems’ as those wherein a small change in one local area or initial condition of a highly interconnected non-linear dynamical system eventually grows and disrupts the entire system [22,23]. Global stability in mathematically idealized biological systems, including neural networks, has been analyzed, showing several ways to define equilibrium points in such systems and hypothesizing abstract conditions underlying homeostasis [24-29].

With all these concepts as a backdrop, we examined the hypothesis that the level of circuitry robustness may be related to the level of complexity in the model itself: that, as interconnectedness and system complexity increase, system robustness increases. This hypothesized class of systems is in contradistinction to complex non-linear dynamical systems which have multiple attractor states that become less stable as the system complexity increases [30-34]. With models of neural circuitry dynamics specifically, we sought to determine whether stability and output remained even when parameters within the system varied considerably, thus addressing the often-leveled criticism of computational modeling that suggests any outcome can be ‘baked into’ the model by the modeler, if desired.

Network terminology and definitions

We draw on the increasing body of literature that applies mathematical network theory to the analysis of brain connectomics. Good references in this regard are Fornito A, et al. [35] and Sporns O [36]. The analysis presented here incorporates simplifications that deliberately differ from previous approaches, as described below. Our models derive purely from computationally efficient, scalable, neural modeling considerations, and not from generic network modeling or network theory [37,38].

Connectivity matrix

All neural groups, which can be thought of as network hubs, are connected to all other neural groups in our study paradigm, but not to themselves or within themselves, and the connectivity matrix shows all boxes checked except along the main diagonal (Figure 1). Thus, the model is fully connected at the group level, with connection weighting or density adjusted separately.

Figure 1. Connectivity matrix for connectome models in this study. All neural groups are connected to all other neural groups (dark boxes) but not to themselves (white boxes on the main diagonal). At a high level, the organization is in populations (p1, p2, …, pm-1, pm) corresponding conceptually to major brain centers (e.g., thalamus, basal ganglia) and groups corresponding conceptually to neural centers (or network hubs) within a major center (e.g., anterior nuclear group of the thalamus, substantia nigra, globus pallidus internal in the basal ganglia). For simplification, there are only two groups per population, one excitatory (gexc) and one inhibitory (ginh), and they are either Pre-synaptic (the source group) or Post-synaptic (the target group). No groups are self-connected or inter-connected within a single population.
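The group-level matrix described in the Figure 1 caption can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it follows the caption's rule that a group connects neither to itself nor to the other group in its own population, and the function name `group_connectivity` and the ordering of groups (two consecutive indices per population) are hypothetical choices, not taken from the simulator.

```python
def group_connectivity(p):
    """Return a (2p x 2p) list-of-lists of 0/1 flags.

    Entry [pre][post] is 1 when group `pre` projects to group `post`.
    Assumption: groups 2k and 2k+1 (excitatory, inhibitory) form population k.
    """
    n_groups = 2 * p  # one excitatory and one inhibitory group per population
    matrix = [[0] * n_groups for _ in range(n_groups)]
    for pre in range(n_groups):
        for post in range(n_groups):
            # Connect only groups that sit in different populations, which
            # also leaves the main diagonal (self-connections) empty.
            if pre // 2 != post // 2:
                matrix[pre][post] = 1
    return matrix


m = group_connectivity(3)
n_projections = sum(sum(row) for row in m)  # count of directed group-to-group links
```

Because every allowed link exists in both directions, the resulting matrix is symmetric, which matches the reciprocal connectivity described in the text.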

The model networks are directed

Our networks are symmetric directed graphs, i.e., each group is reciprocally connected to each other group, with the additional parameter of excitatory or inhibitory effects on the connection target (Figure 2). We are not simulating brain networks that evolved to perform a given function, but rather generic neural networks, to test their stability as the number of different system-level components varies, such as neuron membrane parameters, numbers of synapses, and synapse locations on dendrites that affect connection strength. Nonetheless, the goal is to simulate generic qualities of real brains.

Figure 2. Two reciprocally connected populations. Each population (circles) contains two groups, one excitatory (blue, ExcG11 and ExcG21) and one inhibitory (green, InhG12 and InhG22). Group size varies as a parameter in different experiments from 5 to 150 cells. Each pre-synaptic cell randomly projects to 40% of cells in the target group.

Approximating network density of real brains

Connection density varies anatomically in real brains but connection strength or weighting is modulated by other factors, resulting in over 5 orders of magnitude variation in effective connectivity or weighting. Thus anatomical connectivity may be over 60% but include large numbers of weak connections [35]. Further, brain connectivity weighting varies over time [36,39]. Ultimately, our goal was to model the robustness of functionally connected neural groups and so the approach taken is of effective connectivity [36]. In our model, we found that having each group randomly target 40% of the cells in its target group, given connection weighting stated below, resulted in reasonably correlated activity in all experiments.
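The 40% random targeting rule can be illustrated with a short Python sketch. The function name `random_projection`, the fixed seed, and the rounding of 40% of the target group to a whole number of cells are assumptions made for illustration; the text does not say how the simulator handles group sizes for which 40% is not an integer.

```python
import random


def random_projection(n_pre, n_post, density=0.4, seed=0):
    """Map each pre-synaptic cell index to a sorted list of target cell indices.

    Each pre-synaptic cell picks `density` of the post-synaptic group at
    random, without repeats. Rounding to a whole cell count is an assumption.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    n_targets = round(density * n_post)
    return {
        pre: sorted(rng.sample(range(n_post), n_targets))
        for pre in range(n_pre)
    }


# Smallest group size used in the study: 5 cells, so 2 targets per cell.
proj = random_projection(n_pre=5, n_post=5)
```

Sampling without replacement keeps the per-cell out-degree fixed at 40% of the target group, so density is uniform across cells while the identity of the targets varies.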

Connection weighting

The neural circuitry software incorporates a 10-compartment electrotonic dendritic connection strength parameter used to set connection weights [37,40]. The cell body and dendritic compartments close to it carry more current to the axon hillock than do distal compartments. The weights were varied across experiments (Table 1). Such a model for dendritic processing has the capability of encompassing most arbitrary dendritic tree anatomies and boutons of their synapses [37,38].

| Dendritic Compartments | Relative Connection Strength |
|---|---|
| {4, 5} | 5.4 |
| {4, 5, 6} | 7.18 |
| {4, 5, 6, 7} | 8.52 |
| {3, 4, 5, 6, 7, 8} | 13.13 |

Network degree and network density metrics

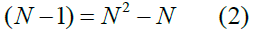

The typical formula for the total number of possible connections or edges in a network, network degree, is

which, visualizing the connectivity matrix Figure 1, is the number of rows times the number of columns, subtracting the one cell in each column on the main diagonal that represents nonexistent self-connections from a group to itself [35]. In our taxonomy of neural populations P and groups g, it is more transparent to multiply out Eq.1

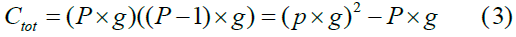

where P, the number of populations, P = N, and add the group size g in Eq. 2 to yield the total number of connections ctot, which is the population/ group cross-product in Fig. 1 minus the population/group blocks along the main diagonal:

As mentioned, to keep the model simple while approximating a realistic network density in the brain, each neuron connects to 40% of all possible target neurons. Thus, network density is 40% in all experiments.

Experimental setup and neural simulation software

Our focus is the effect of the number of nodes and of redundancy in connectivity between neural groups on the ‘robustness’ of the circuit and its output, measured in terms of the firing rates of the neurons as a whole. We explore robustness in neural circuits [1] using biologically-based modeling software that permits arbitrarily large numbers of unique neurons in terms of 10 membrane parameters and electrotonic dendritic connectivity, axonal projections to multiple synaptic locations with axonal delays, timesteps of 0.25 ms, and tracking of every synaptic event and membrane voltage at every time step [37,41]. With this more biologically-based representation of neural circuitry dynamics, we can rigorously control neuron numbers, connectivity, the balance of excitation and inhibition, and numbers of synapses as we manipulate the parameter space and sweep through a variety of sensitivity analyses. We use this ability to examine more closely the transition from less robust to more robust states and its relationship to the complexity of the network.

We prepared a baseline circuit as a configuration of 10 parameters and topology in the Universal Neural Circuitry Simulator (UNCuS) [37]. Models contained 2-9 populations, each containing one excitatory and one inhibitory neural group. Therefore, we examined fully interconnected circuits of balanced excitation and inhibition. To aid transparency and avoid confounding effects, parameters in only population #2 were varied and the firing rate of only one population, #1, was used to gauge variation of the network response.

Circuit Structure and Complexity Metrics

Connectivity parameters and calculations: Let us consider the connections between neurons in any two groups within the network. Each connection is one-way, commonly called a projection, and in the neural simulator there is only a single projection between any two groups, but each projection can connect any one neuron to any number of neurons in the target group (as a real axon might make several synapses on multiple target cells). The total number of axonal projections in the network then is:

where p = number of populations in the network and gtot = total number of groups in the network, with gtot = 2 × p.

The connection ‘density’ of all cells in each group in the network is 40%, i.e., a projection consists of a pre-synaptic neuron randomly connecting to 40% of the post-synaptic neurons in the target group. Thus:

ccpost = 0.4 × cpost

where ccpost = number of connected target cells for a pre-synaptic cell and cpost = number of post-synaptic cells. Each projection contains gsize × ccpost connections, yielding a total number of connections in the network:

The synapses of each cell are made on an electrotonic space of 10 compartments that are, in terms of electrical resistance and therefore the current they send to the cell body when stimulated, able to simulate any arbitrary passive-resistance dendritic configuration [37,40], and numbered 1 - 10 from strongest to weakest effect on the axon hillock area of the cell.

Moreover, synapses further away, electrotonically, rise to a lower current amplitude but have a longer effect in time, whereas those closer to the cell body or axon hillock, electrotonically, have a much higher amplitude effect, but last a shorter time. Such dynamic dendritic processing provides a much richer representation of real dendritic processing than most neural network models and neural node models that use integrate-and-fire equations. If the number of dendritic compartments contacted in each target cell is 6 in all groups, for instance, then the total number of synapses in the entire network is given by:

The total complexity in the network, incorporating that of all systems levels including number of cells, number of projections, and number of synapses, is the “combined complexity” C, given by:

where ctot = total number of cells in the network, i.e., ctot = gtot × gsize.
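To make the bookkeeping in this subsection concrete, the following Python sketch computes the counts for one configuration. Several pieces are assumptions: each group is taken to project to every group outside its own population (so gtot × (gtot - 2) projections), the synapse count is taken as connections times the number of compartments contacted, and the combined complexity is taken as cells × projections × synapses as described in the text. The function and key names are hypothetical.

```python
def network_counts(p, g_size, density=0.4, compartments=6):
    """Count cells, projections, connections, and synapses for one network.

    p            : number of populations (two groups each)
    g_size       : cells per group
    density      : fraction of the target group contacted by each cell
    compartments : dendritic compartments contacted per target cell
    """
    g_tot = 2 * p                        # gtot = 2 x p (from the text)
    projections = g_tot * (g_tot - 2)    # assumption: no within-population links
    cc_post = round(density * g_size)    # targets contacted per pre-synaptic cell
    connections = projections * g_size * cc_post
    synapses = connections * compartments  # assumption: one synapse per compartment
    cells = g_tot * g_size               # ctot = gtot x gsize (from the text)
    combined = cells * projections * synapses  # assumed form of C
    return {
        "cells": cells,
        "projections": projections,
        "connections": connections,
        "synapses": synapses,
        "combined_complexity": combined,
    }


# Smallest configuration in the study: 2 populations, 5 cells per group.
counts = network_counts(p=2, g_size=5)
```

Under these assumptions the smallest network has 20 cells, 8 projections, 80 connections, and 480 synapses; the values reported in the study may differ if the omitted equations use a different form.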

Limitation: Randomized, non-clustered networks

All networks were randomized and homogeneously connected; therefore, there were no ‘clusters’, i.e., highly connected nodes whose behavior might have a stronger effect on the rest of the network relative to that of other nodes. Connection density was uniform throughout the entire network and was therefore a limiting factor in examining local effects on a single group of neurons.

Metrics

Firing Rate (FR): Firing rate (FR) of each individual neuron, as it differs from a baseline to a value when the network is perturbed, is the single measure used in the two metrics describing network robustness. The momentary firing rate FR(t), where t is a given 0.25 ms time step, is calculated by averaging the number of spikes in the trailing 100 ms-period over all cells in a group:

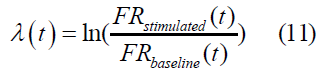
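A trailing-window firing rate of this kind might be computed as in the sketch below. The data layout (one list of spike time-step indices per cell), the function name, and the conversion to spikes per second per cell are assumptions for illustration; the normalization used in the study's equation may differ.

```python
def momentary_firing_rate(spike_steps, t_step, dt_ms=0.25, window_ms=100.0):
    """Group firing rate at time step `t_step`, in spikes per second per cell.

    spike_steps : list with one entry per cell, each a list of the time-step
                  indices at which that cell fired (assumed layout).
    The window covers the trailing `window_ms` ending at `t_step`. Early in a
    run the window is shorter than 100 ms, but the full window length is still
    used as the divisor here, which is a simplifying assumption.
    """
    window_steps = int(window_ms / dt_ms)       # 400 steps for 100 ms at 0.25 ms
    start = max(0, t_step - window_steps + 1)
    n_spikes = sum(
        1 for cell in spike_steps for s in cell if start <= s <= t_step
    )
    window_s = window_ms / 1000.0
    return n_spikes / (len(spike_steps) * window_s)


spikes = [[0, 100, 399], [200, 800]]            # two cells, hypothetical spike steps
rate_early = momentary_firing_rate(spikes, t_step=399)
rate_late = momentary_firing_rate(spikes, t_step=800)
```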

Robustness Metrics: Coefficient of Variance (CV) and Lyapunov Exponent (λ): Two measures of ‘robustness’ were used in this study, a variance metric, coefficient of variance (CV), and a type of Lyapunov exponent λ [42-47].

Coefficient of Variance (CV): The formula we used for coefficient of variance is given by:

where σ is the standard deviation; the middle expression shows that CV is a variance measure that uses some value of a baseline case as its unit, which in our study is the firing rate, FR. Intuitively, the smaller the variance CV from the baseline firing rate FRb of each network, the more robust the network and its tendency to maintain a ‘healthy’ state (see Discussion). In dynamical systems, intuitively, a Lyapunov exponent measures how rapidly two trajectories diverge from initial conditions in phase space. ‘Chaotic’ systems are defined as those that diverge rapidly from close initial points in the phase space. In this sense, robustness, as used herein, intuitively means less divergence between two trajectories in phase space when their initial conditions are tweaked.
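One way to read a variance measure that uses the baseline as its unit is sketched below in Python. The specific form, the root-mean-square deviation of perturbed firing rates from the baseline rate divided by the baseline rate, is an assumption made for illustration and is not necessarily the study's exact formula; the function name is hypothetical.

```python
import math


def baseline_cv(firing_rates, fr_baseline):
    """Baseline-referenced coefficient of variance (assumed form).

    firing_rates : firing rates from perturbed runs of one network
    fr_baseline  : firing rate of the same network with baseline parameters
    Returns sqrt(mean((FR - FRb)^2)) / FRb.
    """
    mean_sq_dev = sum((fr - fr_baseline) ** 2 for fr in firing_rates) / len(firing_rates)
    return math.sqrt(mean_sq_dev) / fr_baseline


# Hypothetical perturbed rates scattered around a baseline of 10.
cv = baseline_cv([9.0, 11.0, 10.0, 12.0, 8.0], fr_baseline=10.0)
```

A value near 0 would mean the perturbed runs stay close to the baseline firing rate, which is the sense of robustness used in the text.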

Lyapunov Exponent (λ): The formula we used for Lyapunov exponent is given as:

Measuring the critical point of a homeostatic healthy state vs. diseased state

Curve-fitting: The best-fit function (ExpDec1, OriginLab, Northampton, MA, USA) for the data was:

y = y0 + A∙e^(-x/τ) (12)

for which fitting coefficients are given in Table 2.

Fitting function: ExpDec1, y = y0 + A∙e^(-x/τ)

| Complexity variable | y0 | A | τ | R2 | x at critical point of minimum radius of curvature |
|---|---|---|---|---|---|
| Combined Complexity | 0.25686 | 67.43311 | 1.25237 | 0.35427 | 5.42612 |
| Total Synapses | 0.2551 | 38.75914 | 0.59206 | 0.33951 | 2.68092 |
| Total Projections | 0.25481 | 31.66717 | 0.51084 | 0.35925 | 2.28526 |
| Total Cells | 0.26574 | 2.12836 | 16.9919 | 0.3232 | 1.64432 |

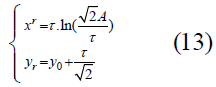

Identifying the transition toward greater stability: A question to consider with these curves, which can be interpreted as the transition from instability to robustness or to a homeostatic healthy state from a diseased or disordered state, is: At what point does such a transition take place? One method to determine this transition point is to use the point of maximum curvature, which is equivalent to the maximum speed of rotation of the tangent of the curve, as the critical transition point of the best-fit function. The procedure is then to find the minimum radius of curvature along the fitted curve. This point is located at:

for which the derivation is given in the Supplement.
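For an exponential decay of the ExpDec1 form y = y0 + A∙e^(-x/τ), the point of maximum curvature has a closed form that follows from the standard curvature formula κ = |y''| / (1 + y'^2)^(3/2). Writing u = (A/τ)∙e^(-x/τ) for the magnitude of the slope, κ = (u/τ) / (1 + u^2)^(3/2), which is largest at u = 1/√2, so x_r = τ∙ln(√2∙A/τ) and y_r = y0 + τ/√2. The Python sketch below is this textbook calculation, not a reproduction of the Supplement's derivation, and the function names are hypothetical. With the Combined Complexity coefficients in Table 2 it gives x_r ≈ 5.426, in agreement with the tabulated critical point.

```python
import math


def max_curvature_point(y0, A, tau):
    """Return (x_r, y_r), the point of maximum curvature of y0 + A*exp(-x/tau)."""
    x_r = tau * math.log(math.sqrt(2.0) * A / tau)
    y_r = y0 + tau / math.sqrt(2.0)
    return x_r, y_r


def curvature(x, A, tau):
    """Curvature of y0 + A*exp(-x/tau) at x (y0 does not affect curvature)."""
    d1 = -(A / tau) * math.exp(-x / tau)       # first derivative
    d2 = (A / tau ** 2) * math.exp(-x / tau)   # second derivative
    return abs(d2) / (1.0 + d1 * d1) ** 1.5


# Combined Complexity fit coefficients from Table 2.
x_r, y_r = max_curvature_point(y0=0.25686, A=67.43311, tau=1.25237)
complexity_at_transition = 10 ** x_r  # the x-axis is log10 of complexity
```

Because the x-axis is logarithmic, the transition value is recovered as 10 raised to x_r.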

Analysis of increasing perturbation to disrupt stability: We also examined how much perturbation might be needed to move an otherwise robust network out of stability. With nine fully-interconnected populations of neurons at the highest connectivity (i.e., the most complex circuit), we manipulated parameters of the neurons in increasing numbers of populations and measured the CV to determine how much perturbation might disrupt the overall stability of the firing rate of neurons in group one.

Sensitivity testing

There are 10 neural cell membrane parameters used in UNCuS and each parameter has a fixed default value (Table S3) with slight jitter to make each cell unique. Running a given network with the default set reproduces a set of calibration data. To test the sensitivity of a neural circuit to each parameter, we set that parameter to its default value as its baseline and assigned random values to all other parameters within an assigned range (Table S3). This was done to determine whether any one parameter had more effect on circuit perturbation than the others. When using firing rate FR as the sensitivity metric, the FR variance, Vij, of a sample set was calculated as:

where FRb is the firing rate when all 10 cell parameters are at their baseline values, normalized by setting it equal to 1, i is the index of the randomly-selected cell parameter and j is the index of the sample set. Then the sensitivity of the ith cell parameter using sample set j was calculated as:

Experiment descriptions

Experiment 1: Variation of cell parameters, numbers of populations and groups: In the experiments, complexity was varied at several levels: cell behavior parameters, numbers of cells per group, and numbers of populations. A baseline case was created by using the default cell parameters given in Table S3. Stimulation was run for 1 second and the firing rates and robustness metrics were calculated for population 1 only. Tables S5 and S6 summarize experiments 1 and 2. In the third experiment, we tested the sensitivity of robustness against changes in 10 individual neuron parameters. Sensitivity was defined as the distance of the system’s average firing rate from its firing rate with the baseline parameters. The baseline firing rate was set at 1 to normalize rates for comparison.

Experiment 1

Main result: Figure 3 shows that as circuit complexity increases, the CV approaches an asymptotic value (~0.26), and that the most complex system has the slowest transition from a less robust to a more robust state. The fitting parameter values are listed in Table 2 and the coordinates of the minimum radius of curvature points are in Table 3. The CV of all four curves approaches an asymptote at ~0.26 (Eq. 9) instead of 0 because the deviation is relative to the baseline firing rate, which itself is a function of the baseline set of neuron parameters (Table S3). Importantly, however, as the systems become more complex, the CV reaches a fundamental lowest level relative to the baseline parameters. For combined complexity, robustness reaches its critical turning point at ~10^5.426 (a total complexity of 266,686 in the circuit, i.e., neurons × projections × synapses), which corresponds to parameters of 2 populations with 5 cells in each group (Figure 3a). Of the four levels of complexity, combined complexity has the greatest minimum radius of curvature (3.5), which implies that its transition from less to more robust is slower than in the less complex samples. As complexity increases from total cells to total projections to total synapses to combined complexity, robustness, as measured by the inverse of the coefficient of variation, increases (Table 2). The same data show that the more complex the system, the less rapid its transition from less to more robust.

Figure 4 shows a similar result to Figure 3, but for CV vs. network degree. Interestingly, as measured by the center of maximum curvature, the critical point toward robustness is 10^2.186, which is ~153 network connections. The fitting parameter values and coordinates of minimum curvature points are listed in Table 4.

Figure 3. Coefficient of Variance distribution over 640 sample points, relative to a baseline parameter set, with four complexity variables: (a) combined-complexity (Eq. 5: total # cells X total # connections X total # synapses), and broken out into (b) total projections, (c) total synapses, and (d) total cells. The red lines are fitting curves (see Table 2) and the red regions indicate 95% confidence bands. The red numbers are the coordinates on the curves where the radius of curvature of CV is at its minimum, which is a measure of the critical point in the transition from a less robust to a more robust system. The blue numbers are the coordinates of the centers of the minimum radius circles. The line from the center of curvature to the critical point on the curve is shown in green. Note that the region of most rapid change along a curve can be difficult to compare across differently-scaled graphs, as shown in the figure. The fitting parameter values are listed in Table 2 and coordinates of minimum curvature points are in Table 3.

Figure 4. Coefficient of Variance distribution over 640 sample points relative to a baseline parameter set versus network degree (cf. Fig. 3). As measured by the point of maximum curvature, the critical transition to robustness is 10^2.186, which is ~153 connections in the network. The fitting parameter values and coordinates of minimum curvature points are listed in Table 4.

| Parameter | Critical Point xr | Critical Point yr | Curvature Center xc | Curvature Center yc | Curvature Radius |
|---|---|---|---|---|---|
| Combined Complexity | 5.426 | 1.142 | 6.992 | 4.273 | 3.5 |
| Total Synapses | 2.681 | 0.674 | 3.421 | 2.154 | 1.655 |
| Total Projections | 2.285 | 0.616 | 2.924 | 1.893 | 1.428 |
| Total Cells | 1.644 | 0.408 | 1.904 | 0.927 | 0.58 |

| Model (OriginLab) | ExpDec1 |
|---|---|
| Equation | y = A1*exp(-x/t1) + y0 |
| Plot | Coefficient of Variance |
| y0 | 0.27871 ± 0.00768 |
| A1 | 201523.26818 ± 559170.83636 |
| t1 | 0.15131 ± 0.03259 |
| Reduced Chi-Sqr | 0.02312 |

Experiment 2

Main result: As the number of perturbed populations increases, the asymptote y0 of the robustness measure increases, as measured by average Lyapunov exponent, due to greater variability in the parameter space, while in all cases robustness increased with complexity (Figure 5). The transition from less robust to more robust behavior can be seen along the perturbed population axis.

Figure 5. Coefficient of Variance vs. Combined Complexity and Number of Perturbed Populations. As the number of perturbed populations increases from 1 through 8, the CV increases as well due to the higher proportion of perturbed elements in the system, while, as in Fig. 3, CV decreases significantly with increased system complexity. The 3D plot provides a perspective on the transition from less robust to more robust behavior along the Disturbed Populations axis.

To examine how perturbation affects network stability, we set up 8 different stimulating population cases among 9 populations, using firing rate FR(t) in population 1 as the degree of perturbation and perturbations of cell parameters in successive subsets of populations 2-9 as the perturbation source (i.e., population 2, then populations 2 & 3, etc. up to populations 2-9). All other variables in the experiment are the same as in Experiment 1. The eight population perturbation runs are shown in Figure 5.

Figures 6 and 7 show robustness in the same setup using a different measure of stability, average Lyapunov Exponent (ALE). This measure, more closely related to typical LE analyses of dynamical systems to determine how quickly they diverge or converge as a measure of stability, shows that the dynamic ALE not only rapidly settles to a low positive value (~0.1) as complexity increases, but the standard deviation of its value also decreases significantly as complexity increases. These findings are consistent with the changes seen at similar complexity values with CV measures. However, Fig. 6 shows that when ALE values are separated out for each level of complexity, ALE proves to be a less sensitive measure of robustness than the CV of firing rate.

Figure 6. Average Lyapunov Exponent (ALE) Distribution with Combined Complexity. The LE λ(t) of each complexity configuration is calculated at each time step and then averaged over time: λ = Σλ(t)/TotalSteps. Panel a shows ALE of 552 individual Combined Complexity configurations. The red line is a curve-fit using y = y_0+A∙e^(x/τ) (ExpGro1, OriginLab). Panel b is the standard deviation of ALE over combined complexity, indicating increased robustness with increased complexity. The red line is a fit using y = y_0+A∙e^(-x/τ) (ExpDec1, OriginLab). Panels c and d are ALE distributions vs. complexity (x-axis set ID# indicates the run# of 50 sets of randomized 10-parameters in 2- and 9-population cases). Black squares indicate 5-cell group size, red indicates 150-cell group size. Robustness is significantly greater in the more complex 9-population setup than in the less complex 2-population setup.

Figure 7. Average Lyapunov Exponent (ALE) Distribution vs. Combined Complexity and Number of perturbed groups. The vertical line through each point shows standard deviation (SD). The 80 points in each panel are fitted with an exponential function ExpDec1 (black curves). Their asymptotic limits increase with number of perturbed populations varying from 1 at top left to 8 at bottom right: (1) 0.11233; (2) 0.11821; (3) 0.11829; (4) 0.11428; (5) 0.11588; (6) 0.113; (7) 0.1875; (8) 0.15582.

Experiment 3

Main result: Sensitivity was defined as the distance of the system’s average firing rate from its firing rate with baseline parameters, and that rate was set at 1 to normalize the plot (Eqs. 13, 14 and 15). The network’s sensitivity to neuron parameter changes did not change with increased complexity (Figure 8). Not unexpectedly, since we used firing rate as a metric for robustness, results were more sensitive to the baseline cell threshold, STh, than to any other parameter, since threshold directly modulates firing rate (firing rate is inversely proportional to threshold). Extensive examinations of the cell parameter space [48] reveal numerous stable attractors that are known as different types of neurons, which the nervous system has evolved to perform specific functions.

Figure 8. Sensitivity of Individual Cell Parameters (Supplement Table S3). Shown are 50 combined complexities and 50 sets of random values of the 10 cell parameters for each combined complexity. Sensitivity is measured by the distance of the system’s average firing rate from the firing rate when all cell parameters are at baseline values (baseline firing rate is set to 1 to normalize the plot values; points below 1 indicate firing rate below baseline). Data fits are linear (color-coded for each cell parameter), indicating that, while there exists significant sensitivity in the runs within a given level of complexity, average parameter sensitivity did not vary much with system complexity. The most sensitive parameter is threshold (Sth), not unexpectedly since we measure sensitivity by firing rate, which is inversely proportional to threshold.

Main results

Highly inter-connected and recurrent neural circuits throughout the CNS must be able to be perturbed by constant stimuli such as sensory input, to tolerate component failure, and yet be able to maintain function from an information processing standpoint, related primarily to firing patterns [45,49-51]. The main result of the first two experiments is that, given neural circuitry with a basic excitatory/inhibitory balance to remove that obvious known stability pre-requisite, robustness can be enhanced by increasing system complexity beyond a critical point [17,52].

Conversely, recurrence can be a source of instability and fragility when functioning as positive feedback but a source of stability and robustness when functioning as negative feedback [38,53-55]. Inherent ‘noise’ levels in a neural circuit may also be mechanisms of maintaining robustness [56], and the work herein shows that robustness against noise can emerge with increased complexity. These results may not apply to small, highly specific circuits [20]. On the other hand, medium- to large-scale connectome models may require an inherent degree of complexity, visualized on the plots as a corner point at the minimum radius of curvature, to perform a variety of behaviors (input-output specifications) with character that is robustly like the actual system being portrayed. One conclusion that may apply to the evolution of neural circuits and more broadly to developing control systems or homeostatic systems is that, once a certain degree of complexity is in place, the system can evolve and perform robustly enough to contribute to a positive evolutionarily selective force.

Addressing criticism of biological models

A criticism leveled against modeling in neural circuitry function has been that because all the neuron, ion channel, membrane parameter, transmitter, and ultrastructural components are typically not perfectly known, parameter wiggle room can be used to manipulate model behavior. One answer to this criticism is that as the system grows in complexity, the variation in any one parameter, or even several parameter values, may have diminishing impact on the overall stability and resulting system dynamics. Thus, manipulating individual parameters in localized parts of a circuit to bias it toward a desired outcome is unlikely to be effective unless significant numbers of parameters are changed in several areas or non-biological changes are made in some individual parameters.

As a corollary, when some parameters in a simulation are not precisely known but are estimated within a biologically reasonable range, the output of the circuit may nonetheless be similar to the output obtained when those parameters are precisely known. When modeling a nonlinear system with wide parameter ranges or ‘sloppy’ parameters, increasing model complexity may therefore be a technique to help produce falsifiable predictions [57-59].

Rationale for using firing rate as the measure of variance

A significant advance on Bernard and Cannon’s homeostasis concept was made by the mathematician Norbert Wiener, who made homeostasis the centerpiece of his version of control theory, cybernetics. Cybernetics was conceived to bridge the gap between biological systems and autonomous systems that he envisioned would eventually lead to artificial general intelligence [60,61]. From a different perspective, functional connectivity is defined as correlation of activity between neural groups. “Activity” is measured in different ways that are all proxies for firing rates. Functional connectivity has grown in importance as a way to establish a baseline healthy state via the brain’s resting state functional connectivity, deviation from the healthy state, and restoration toward the healthy state by a given intervention [32,62-81].
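The definition of functional connectivity as correlation of activity between neural groups can be made concrete with a short sketch. It assumes, for illustration only, that the activity of each group is available as a binned firing-rate time series and that Pearson correlation is the chosen measure; other correlation measures are also used in practice. The group names, function names, and rate values are hypothetical and are not taken from this study.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant series has no defined correlation; report 0
    return sxy / math.sqrt(sxx * syy)

def functional_connectivity(rates):
    """Pairwise correlation of firing-rate series.

    rates: dict mapping a group name to its firing rates per time bin.
    Returns a dict mapping (group_a, group_b) to their correlation.
    """
    names = list(rates)
    return {(a, b): pearson(rates[a], rates[b]) for a in names for b in names}

# Hypothetical firing rates (spikes/s) in four time bins for three groups.
rates = {
    "group_A": [1.0, 2.0, 3.0, 4.0],
    "group_B": [2.0, 4.0, 6.0, 8.0],  # rises with group_A
    "group_C": [4.0, 3.0, 2.0, 1.0],  # falls as group_A rises
}
fc = functional_connectivity(rates)
print(fc[("group_A", "group_B")], fc[("group_A", "group_C")])
```

Under these assumptions, a deviation from a baseline state could be summarized by comparing the entries of two such matrices, one computed at rest and one computed after a perturbation or intervention.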

This study attempted to analyze connectivity complexity in simplified, generic neural circuits. In the brain or spinal cord of most complex organisms, there are specific circuits that enable the enormous range of behaviors seen, and any isolated part of that circuitry is not totally balanced in excitation and inhibition, numbers of synapses, and so forth. While we have tried to eliminate such connectivity as a factor in studying whether complexity per se creates robustness, we may have missed transition points, earlier or later, at which certain smaller or differently connected circuits could achieve robustness.

Moreover, disorders of neural circuit physiology occur, such as seizures or movement disorders. Although such states could be seen as dysfunctional ‘stable attractor states’ of the system, they likely represent situations where cell parameter changes have strayed outside the range we have considered. We did not attempt to account for diseased states categorized in terms of dynamical systems theory. A large part of the maintenance of membrane, synaptic, and circuitry parameters in real nervous systems is done by glia. Glia outnumber neurons by 10-20:1, communicate with each other and with neurons by gap junctions, and modulate neurotransmitters and other molecules affecting neural activity. Glia act as a buffer that eliminates perturbed parameters and contribute to circuit robustness, but their functions were not separated out in our study.

An analysis of biologically based neural circuitry models, balanced in basic dimensions such as inhibition and excitation, shows that, with increasing complexity in numbers of elements, these systems become more robust and less sensitive to one or more parameter values. An evolutionary strategy to achieve robustness of desired behavior at multiple systems levels is to evolve component and connectivity properties that exceed critical values. These properties are independent of, and orthogonal to, redundancy alone and deserve study as a critical ingredient in biological homeostasis and the control theory of biological systems. As measured by the center of maximum curvature, the transition of the modeled neural circuitry systems to a robust state occurred at ~153 network connections (network degree).

Generous support for this work was provided by the Sydney Family Foundation. We thank K. Carlson for feedback and help with the manuscript.

JEA conceived and guided the simulations that LZ performed and from which the figures were selected. All authors researched the literature. K. Carlson helped to draft the paper, which all authors contributed to and approved.

The authors declare no competing interests.

Journal of Computer Science & Systems Biology received 2279 citations as per Google Scholar report